Running AI Models Locally: Harnessing Language Models for Privacy

Chapter 1: Introduction to Local AI Models

AI language models (LMs) such as Bard, GPT-3, and LaMDA have transformed our interactions with technology. These models are capable of generating text, translating languages, creating diverse types of content, and providing informative answers to inquiries.

Despite their capabilities, utilizing these models via cloud services can become costly and requires a reliable internet connection. Moreover, concerns about data privacy and security often accompany cloud usage.

Running AI models locally presents a viable alternative. By executing an AI model on your personal computer, you keep control over your data and avoid cloud fees. Once the model has been downloaded, you also no longer need an internet connection to use it.

This guide will delve into the benefits of running AI models locally and outline the fundamental steps involved in this process. We will also share resources to facilitate your journey.

Advantages of Local AI Models

- Privacy and Security: Your data remains on your device, minimizing the risk of interception or misuse.

- Cost-Effectiveness: Bypassing cloud services can lead to significant savings over time.

- Offline Accessibility: Local models can be used without an internet connection, making them especially useful in areas with limited connectivity.

- Full Control: You have complete autonomy over the model and its applications.

Basic Steps to Run an AI Model Locally

- Select a Model: Numerous AI models exist, each with distinct advantages and disadvantages. Choose one that aligns with your requirements.

- Download the Model: After selecting a model, download it from the internet.

- Install the Model: The installation process will differ based on the chosen model.

- Execute the Model: Once installed, you can begin using it.

Next, we'll outline a straightforward process for completing these steps. However, it is essential first to understand the technical prerequisites.

Technical Requirements for Local AI Models

The specifications needed to run AI models locally can vary based on the model and project complexity. Nonetheless, some general requirements include:

- Hardware:

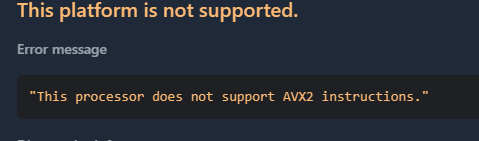

- Processor: An Intel Core i7 or higher, or an AMD Ryzen 7 or above (ensure AVX2 instruction set support).

- RAM: A minimum of 16 GB is recommended.

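- Graphics Card: A GPU is optional but speeds up responses considerably. What matters most is its video memory (VRAM), which must be large enough to hold the model you want to load.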

- Storage Space: The required storage will depend on the model’s size; some may need several gigabytes.
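As a rough way to compare a machine against the requirements above, here is a minimal Python sketch that uses only the standard library. The thresholds `min_ram_gb` and `model_size_gb` are illustrative assumptions, not values required by any particular tool. The AVX2 check reads `/proc/cpuinfo`, so it only works on Linux, and the RAM check relies on `os.sysconf`, which is unavailable on Windows. Where a value cannot be determined, the sketch reports `None` rather than guessing.

```python
import os
import shutil


def total_ram_gb():
    """Return total physical RAM in GB, or None if it cannot be determined."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        page_count = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None  # for example on Windows, where os.sysconf does not exist
    return page_size * page_count / 1024**3


def has_avx2():
    """Return True/False on Linux x86, or None where the flag list is unavailable."""
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as f:
            for line in f:
                if line.startswith("flags"):
                    return "avx2" in line.split()
    except OSError:
        pass
    return None


def check_system(min_ram_gb=16.0, model_size_gb=8.0, path="."):
    """Compare this machine against illustrative (assumed) thresholds."""
    ram = total_ram_gb()
    free = shutil.disk_usage(path).free / 1024**3
    return {
        "cpu_cores": os.cpu_count(),
        "ram_gb": ram,
        "ram_ok": None if ram is None else ram >= min_ram_gb,
        "avx2": has_avx2(),
        "free_disk_gb": free,
        "disk_ok": free >= model_size_gb,
    }


if __name__ == "__main__":
    for key, value in check_system().items():
        print(f"{key}: {value}")
```

This sketch does not inspect the graphics card. Querying video memory depends on the GPU vendor's tools, so it is left out here.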

LM Studio: Your Tool for Local AI Modeling

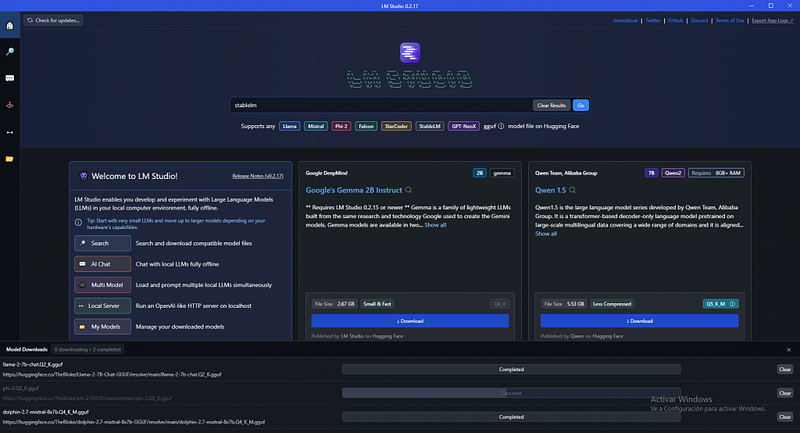

LM Studio is a free desktop application that lets you run AI language models directly on your computer. Once a model has been downloaded, it runs without internet access, and your prompts stay on your machine, which addresses the privacy concerns of cloud services.

Steps to Utilize LM Studio:

- Download and Install LM Studio:

  - Click the “Download” button and choose the version that suits your operating system.

  - Follow the installation instructions provided.

- Download an AI Model:

  - LM Studio offers a variety of AI models. Find the available models in the “Models” tab.

  - Click the “Download” button next to your desired model, which will save it to your computer.

- Run the AI Model:

  - Open LM Studio.

  - Navigate to the “Models” tab.

  - Select the model you wish to run and click the “Run” button.

  - A new window will appear for you to interact with the model.

- Configure the AI Model:

  - LM Studio allows for various configurations, such as adjusting temperature and response length.

  - Access these settings by clicking the “Settings” button and experiment with different options for optimal results.
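To build some intuition for the temperature and response length settings mentioned above, here is a toy Python sketch. It is not LM Studio's implementation. The vocabulary, the scores (`logits`), and the function names are made up for illustration, and a real model recomputes its scores after every token instead of reusing a fixed list. The idea it shows is the common one: scores are divided by the temperature before being turned into probabilities, so a low temperature favours the top choice and a high temperature spreads probability more evenly, while the response length simply caps how many tokens are drawn.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_tokens(vocab, logits, temperature, max_tokens, seed=0):
    """Draw up to max_tokens tokens from a fixed toy distribution."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=max_tokens)


# Hypothetical four-word vocabulary with made-up scores.
vocab = ["the", "a", "cat", "dog"]
logits = [2.0, 1.0, 0.5, 0.1]

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    sample = sample_tokens(vocab, logits, temperature, max_tokens=5)
    print(temperature, [round(p, 3) for p in probs], sample)
```

Running it shows the probability of the highest-scoring word shrinking as the temperature rises, which is why higher temperatures tend to produce more varied output.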

As you progress, understanding the model’s size is crucial, not just for downloading but also for loading it into memory. Ideally, the whole model fits in the GPU’s video memory (VRAM), and larger models can take up significant space. If size is a concern, consider a model with fewer parameters or a quantized version, which stores each weight with fewer bits in exchange for some loss of accuracy.
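The relationship between model size, quantization, and memory can be estimated with simple arithmetic: the number of parameters times the bits per weight, divided by eight, gives the bytes needed for the weights. The Python sketch below applies that formula. The `overhead_fraction` of 20% for working memory is an assumption chosen for illustration, and the function names are hypothetical. Actual usage depends on the model format, context length, and the software loading it.

```python
def estimate_model_memory_gb(num_params_billion, bits_per_weight, overhead_fraction=0.2):
    """Estimate memory in GB (10^9 bytes) needed to load a model.

    overhead_fraction is an assumed allowance for working memory on top
    of the weights; it is a rough guess, not a measured value.
    """
    weight_bytes = num_params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead_fraction) / 1e9


def fits_in_memory(num_params_billion, bits_per_weight, available_gb):
    """Return True if the estimated footprint fits in the available memory."""
    return estimate_model_memory_gb(num_params_billion, bits_per_weight) <= available_gb


if __name__ == "__main__":
    # Compare a 7-billion-parameter model at several quantization levels
    # against a hypothetical graphics card with 8 GB of video memory.
    for bits in (16, 8, 4):
        needed = estimate_model_memory_gb(7, bits)
        verdict = "fits" if fits_in_memory(7, bits, available_gb=8.0) else "does not fit"
        print(f"7B parameters at {bits}-bit: about {needed:.1f} GB, {verdict} in 8 GB")
```

Before overhead, a 7-billion-parameter model needs about 14 GB for its weights at 16 bits per weight and about 3.5 GB at 4 bits, which is why quantized versions are often the practical choice on consumer hardware.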

Conclusion

Thank you for engaging with our discussion on running AI models locally. We hope this information has been both insightful and useful. If you found this content valuable, consider following us for future updates.
