Building Modern ELT Pipelines in Snowflake: A Comprehensive Guide


Chapter 1: Understanding the ELT Approach

In contemporary data management, many organizations are opting for an ELT (Extract, Load, Transform) methodology rather than the traditional ETL (Extract, Transform, Load) process. This article elaborates on how this modern strategy can be effectively executed within Snowflake, utilizing SQL and Tasks.

To provide some context, let’s revisit the definitions and benefits of Data Lakehouses and the ELT methodology.

Section 1.1: The Data Lakehouse Concept

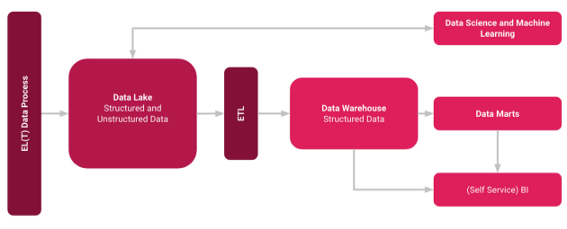

The ELT approach is particularly beneficial when dealing with large datasets, because it removes transformation work from the transport step. Instead of transforming data in transit, the pipeline stores it in its raw form in a Data Lake. From there, the data can be transformed as needed for different applications, such as Self-Service BI and Machine Learning.
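As a rough illustration of the load-then-transform order described above, the following Snowflake SQL sketch stores a raw JSON record unchanged and only afterwards derives a typed table from it. The table names `raw_events` and `events_clean` and the sample record are hypothetical and do not come from the original article.

```sql
-- Hypothetical landing table: each row holds one raw, semi-structured record.
CREATE OR REPLACE TABLE raw_events (
  payload   VARIANT,
  loaded_at TIMESTAMP_LTZ DEFAULT CURRENT_TIMESTAMP()
);

-- Load: store the record as-is, without reshaping it.
-- (PARSE_JSON has to be used with INSERT ... SELECT rather than VALUES.)
INSERT INTO raw_events (payload)
SELECT PARSE_JSON('{"user_id": 42, "event": "login", "ts": "2024-01-01 10:00:00"}');

-- Transform: derive a typed table inside the warehouse, after loading.
CREATE OR REPLACE TABLE events_clean AS
SELECT
  payload:user_id::NUMBER   AS user_id,
  payload:event::STRING     AS event_name,
  payload:ts::TIMESTAMP_NTZ AS event_ts
FROM raw_events;
```

Because the raw table is kept, other transformations (for example, a feature table for Machine Learning) could later be derived from the same data without reloading it.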

The Data Lakehouse combines the strengths of both Data Lakes and Data Warehouses into a unified framework. For a deeper understanding of the Data Lakehouse concept, refer to further resources.

Section 1.2: What Are Tasks in Snowflake?

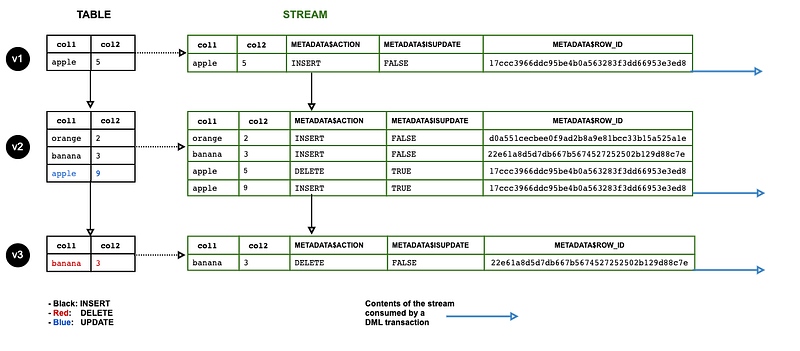

Tasks in Snowflake serve multiple purposes, such as executing a single SQL statement, calling a stored procedure, or utilizing procedural logic via Snowflake Scripting. Tasks can be integrated with table streams to create continuous ELT workflows, ensuring that only the most recently modified data is processed.

Tasks are created with plain SQL and can be triggered based on conditions, events, or timers. Below is a sample blueprint for creating a task in Snowflake SQL. You can experiment with it using a Snowflake free trial account. Make sure you first create and select a database in which you have permission to create tasks.

```sql
-- CREATE DATABASE test;
-- If you are using a free trial account, create a test database first and select it.

-- Create the task
CREATE TASK t1
  SCHEDULE = '5 MINUTE'
  USER_TASK_MANAGED_INITIAL_WAREHOUSE_SIZE = 'XSMALL'
AS
  SELECT CURRENT_TIMESTAMP;
```
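A sketch of how the task `t1` defined above might be started, tested, and inspected. It assumes your role has the privileges required to run tasks (for serverless tasks such as this one, that includes EXECUTE MANAGED TASK on the account); the exact privileges you need depend on your setup.

```sql
-- Newly created tasks are suspended; resume the task so the schedule takes effect.
ALTER TASK t1 RESUME;

-- Optionally trigger a single run right away instead of waiting for the schedule.
EXECUTE TASK t1;

-- Inspect recent runs of the task (state, timing, and any error message).
SELECT name, state, scheduled_time, completed_time, error_message
FROM TABLE(INFORMATION_SCHEMA.TASK_HISTORY(TASK_NAME => 'T1'))
ORDER BY scheduled_time DESC
LIMIT 10;

-- Suspend the task again when you are done experimenting, so it stops consuming credits.
ALTER TASK t1 SUSPEND;
```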

Once resumed with ALTER TASK t1 RESUME (new tasks start out suspended), this task runs every five minutes. It is only a minimal example; more complex operations that create tables, apply updates, or call functions can be scheduled in the same way.
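To make the combination of streams and tasks more concrete, here is a minimal sketch of a task that processes only newly inserted rows. All object names (`raw_orders`, `orders_clean`, `raw_orders_stream`, `process_orders_task`) and the column layout are hypothetical; the stream, the `WHEN` clause, `SYSTEM$STREAM_HAS_DATA`, and the `METADATA$ACTION` column are standard Snowflake features.

```sql
-- Hypothetical source and target tables.
CREATE OR REPLACE TABLE raw_orders (order_id NUMBER, amount NUMBER(10,2));
CREATE OR REPLACE TABLE orders_clean (
  order_id     NUMBER,
  amount       NUMBER(10,2),
  processed_at TIMESTAMP_LTZ
);

-- The stream records row-level changes made to raw_orders from now on.
CREATE OR REPLACE STREAM raw_orders_stream ON TABLE raw_orders;

-- The task wakes up every five minutes but only runs its statement
-- when the stream actually contains unprocessed changes.
CREATE OR REPLACE TASK process_orders_task
  SCHEDULE = '5 MINUTE'
  USER_TASK_MANAGED_INITIAL_WAREHOUSE_SIZE = 'XSMALL'
WHEN
  SYSTEM$STREAM_HAS_DATA('RAW_ORDERS_STREAM')
AS
  INSERT INTO orders_clean (order_id, amount, processed_at)
  SELECT order_id, amount, CURRENT_TIMESTAMP()
  FROM raw_orders_stream
  WHERE METADATA$ACTION = 'INSERT';

-- Tasks are created suspended, so resume it to start the schedule.
ALTER TASK process_orders_task RESUME;
```

Reading the stream inside a DML statement advances its offset once the statement commits, so each run sees only the changes that arrived since the previous one. This sketch handles inserts only; updates and deletes would need additional logic, such as a MERGE.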

Chapter 2: Summary and Further Exploration

Snowflake provides Data Engineers with robust tools for implementing ELT workflows through streams, tasks, and SQL. Streams capture data changes in tables, and tasks can then consume those changes on a schedule, so that only new or modified rows are processed. This approach offers a scalable and efficient means of transformation. For those interested in expanding their knowledge, the following videos may also prove beneficial:

Video Description: This video demonstrates how to build end-to-end data pipelines using Snowflake, highlighting essential techniques and best practices.

Video Description: Join this coding session to learn how to build an ELT pipeline in just one hour using dbt, Snowflake, and Airflow.
